At last, with all this surrounding pressure, you finally decided to write tests. Now you always set up Travis CI to run whenever a code change is submitted. You feel confident when pull requests come in, and soon your test suite's code coverage will reach 100%.

Let me be difficult to please here...

You can reach clean code heaven with one final little step: transform the test suites into specifications!

Bad pattern

In many Open Source projects, great attention is paid to the quality of the code itself. Yet quite often, code is merged without its tests being thoroughly reviewed.

In other words, it is not rare for the quality of the tests to fall short of the quality of the application code.

The most frequent pattern is that all tests are implemented in the same test case [1]:

    class GameTest(unittest.TestCase):

        def test_update(self):
            game = Game()
            self.assertEqual(game.played, False)

            game.score = 4
            self.assertEqual(game.played, True)

            game.player = 'Jean-Louis'
            self.assertEqual(unicode(game), 'Jean-Louis (score: 4)')

            # See bug #2780
            game.score = None
            self.assertEqual(unicode(game), 'Jean-Louis')

Writing tests like this has many drawbacks:

- One has to read the entire test to understand the expected behaviour of the code;

- If the test fails, it will be hard to find out precisely which part of the code has failed;

- Refactoring the code will probably mean rewriting the entire test, since its instructions are interdependent;

- The TestCase and setUp() notions are underused.

Better pattern

A simple way to improve the quality of the tests is to see them as specifications. After all, it makes sense: you add code for a reason! The tests are not only here to prevent regressions, but also to make the expected behaviour of the application explicit!

I believe that many projects would benefit greatly from following this approach [2].

    # test_game.py

    class InitializationTest(unittest.TestCase):

        def setUp(self):
            self.game = Game()

        def test_default_played_status_is_false(self):
            self.assertEqual(self.game.played, False)


    class UpdateTest(unittest.TestCase):

        def setUp(self):
            self.game = Game()
            self.game.player = 'Jean-Louis'
            self.game.score = 4

        def test_played_status_is_true_if_score_is_set(self):
            self.assertEqual(self.game.played, True)

        def test_string_representation_is_player_with_score_if_played(self):
            self.assertEqual(unicode(self.game), 'Jean-Louis (score: 4)')

        def test_string_representation_is_only_player_if_not_played(self):
            # See bug #2780
            self.game.score = None
            self.assertEqual(unicode(self.game), 'Jean-Louis')

Writing tests like this has many advantages:

- Each case isolates a situation;

- Each test is an aspect of the specification;

- Each test is independent;

- The testing vocabulary is honored: we set up a test case;

- If a test fails, it is straightforward to understand what part of the spec was violated;

- Tests that were written when fixing bugs make the expected behaviour for edge cases explicit.

Reporting

Now that we have made the specs explicit, we will want to read them properly.

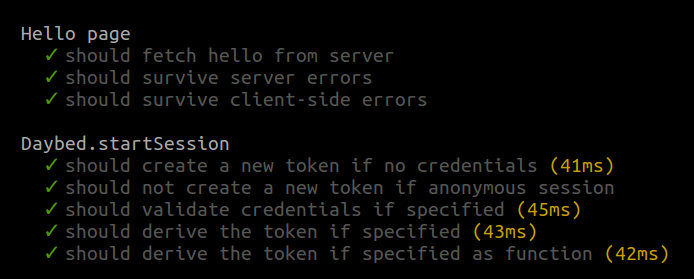

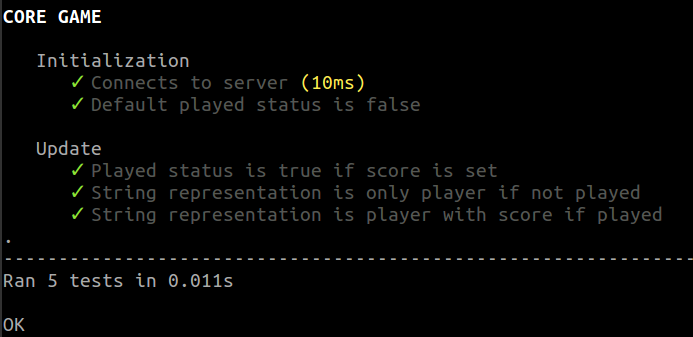

One of the things I like in JavaScript is Mocha. Apart from the nice API and the very rich feature set, its default test reporter is great: it is colourful and structurally invites you to write tests as specs.

In our project, we were using nose, so I decided to write a reporter that would produce the same output as Mocha.

You can install and use it this way:

    $ pip install nose-mocha-reporter
    $ nosetests --with-mocha-reporter yourpackage/

It will produce the following output:

It takes the test suites and extracts their names as readable strings:

- tests/core/test_game.py → CORE GAME

- class InitializationTest(TestCase) → Initialization

- def test_played_status_is_true_if_score_is_set → Played status is true if score is set
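The three conversions above could be sketched roughly as follows. This is not the reporter's actual code, just an illustration under my own assumptions: the function names are hypothetical, and I assume the usual `test_` prefix and `Test` suffix naming conventions.

```python
import os
import re


def humanize_module(path):
    """Turn a test file path into an upper-case title."""
    segments = os.path.splitext(path)[0].split('/')
    words = []
    for segment in segments:
        # Skip directories that only say "these are tests".
        if segment in ('test', 'tests'):
            continue
        # Strip the conventional prefix from module names.
        if segment.startswith('test_'):
            segment = segment[len('test_'):]
        words.append(segment.replace('_', ' '))
    return ' '.join(words).upper()


def humanize_class(name):
    """Drop the trailing Test/Tests/TestCase and split CamelCase words."""
    name = re.sub(r'(TestCase|Tests|Test)$', '', name)
    return re.sub(r'(?<=[a-z0-9])(?=[A-Z])', ' ', name)


def humanize_method(name):
    """Turn a test method name into a sentence."""
    if name.startswith('test_'):
        name = name[len('test_'):]
    sentence = name.replace('_', ' ')
    return sentence[:1].upper() + sentence[1:]
```

With these helpers, `humanize_module('tests/core/test_game.py')` gives `CORE GAME`, and the class and method names from the list above come out as `Initialization` and `Played status is true if score is set`.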

It also measures the execution time of each test in order to highlight the slow ones.
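Timing each test can be sketched with the standard `unittest` machinery alone. Again, this is not how the nose plugin is implemented: `TimingResult`, `SpeedTest`, `time_suite` and the `SLOW_THRESHOLD` value are all hypothetical names and numbers chosen for the example.

```python
import time
import unittest

SLOW_THRESHOLD = 0.05  # seconds; arbitrary cut-off assumed for this sketch


class TimingResult(unittest.TestResult):
    """Record how long each test takes, keyed by test id."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.elapsed_by_test = {}
        self._started_at = {}

    def startTest(self, test):
        super().startTest(test)
        self._started_at[test.id()] = time.perf_counter()

    def stopTest(self, test):
        started = self._started_at.pop(test.id())
        self.elapsed_by_test[test.id()] = time.perf_counter() - started
        super().stopTest(test)

    def slow_tests(self, threshold=SLOW_THRESHOLD):
        """Return the ids of tests that took at least `threshold` seconds."""
        return sorted(
            test_id
            for test_id, elapsed in self.elapsed_by_test.items()
            if elapsed >= threshold
        )


class SpeedTest(unittest.TestCase):
    """Toy specs: one fast, one artificially slow."""

    def test_fast_path_returns_immediately(self):
        self.assertTrue(True)

    def test_slow_path_sleeps(self):
        time.sleep(0.1)


def time_suite(test_case):
    """Run a TestCase class and return the populated TimingResult."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_case)
    result = TimingResult()
    suite.run(result)
    return result
```

Calling `time_suite(SpeedTest).slow_tests()` should list only the sleeping test, which a reporter could then print in a different colour.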

To conclude, this reporter has a pretty modest objective: to remind you that the tests you write should read as specifications [3]!

Special thanks!

I'm very grateful to Antoine and Alex, who showed me the light on this. Since they might not be aware of the influence they had on me, I am taking this opportunity to thank them loudly :)

| [1] | See a good example that I wrote in the past |

| [2] | For example, see this code I wrote later on. |

| [3] | To be honest, I haven't worked much with pytest (I probably should), and I don't know its ecosystem: there might be something similar... |
